Amazon S3 to DynamoDB Automation

Export Object from S3 to DynamoDB using AWS Lambda

Amazon S3

Amazon DynamoDB

Amazon DynamoDB is a fully managed proprietary NoSQL database service that supports key–value and document data structures and is offered by Amazon.com as part of the Amazon Web Services portfolio. Hundreds of thousands of AWS customers have chosen DynamoDB as their key-value and document database for mobile, web, gaming, ad tech, IoT, and other applications that need low-latency data access at any scale. Create a new table for your application and let DynamoDB handle the rest.

Use Case

Suppose you need to automate the following: whenever a new JSON file is uploaded to an S3 bucket, its contents should be stored as an item in a DynamoDB table. S3 cannot write a JSON object to a DynamoDB table directly, so a Lambda function supplies the logic that reads the object and writes it to the table.

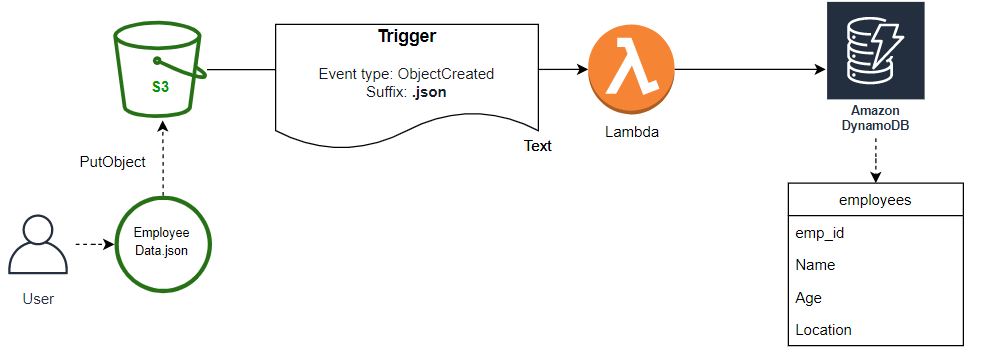

Architectural design

Figure: Automation Workflow

Workflow

- A user or application puts a .json object into the S3 bucket

- Lambda is triggered when a .json object is created in that S3 bucket

- Lambda converts the data and puts it into the DynamoDB table
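The three steps above can be sketched as a small handler. This is a minimal illustration, not the code from the linked repository: the helper names `parse_s3_event` and `json_to_item` are hypothetical, and the sketch assumes each uploaded file holds exactly one JSON object whose fields include the table's key.

```python
import json
import urllib.parse
from decimal import Decimal

TABLE_NAME = "employees"  # assumption: same name as the table created for this blog


def parse_s3_event(event):
    """Return (bucket, key) pairs for every record in an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (a space becomes '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def json_to_item(body):
    """Parse JSON text or bytes into a dict the boto3 DynamoDB resource accepts."""
    # The boto3 resource layer rejects Python floats, so parse them as Decimal.
    return json.loads(body, parse_float=Decimal)


def lambda_handler(event, context):
    # Imported here so the pure helpers above can be exercised without the AWS SDK.
    import boto3

    s3 = boto3.client("s3")
    table = boto3.resource("dynamodb").Table(TABLE_NAME)

    written = 0
    for bucket, key in parse_s3_event(event):
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        table.put_item(Item=json_to_item(body))
        written += 1
    return {"statusCode": 200, "written": written}
```

Keeping the event parsing and the JSON conversion in separate functions makes it possible to check them locally with a hand-written event before deploying.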

How to Set Up This Workflow?

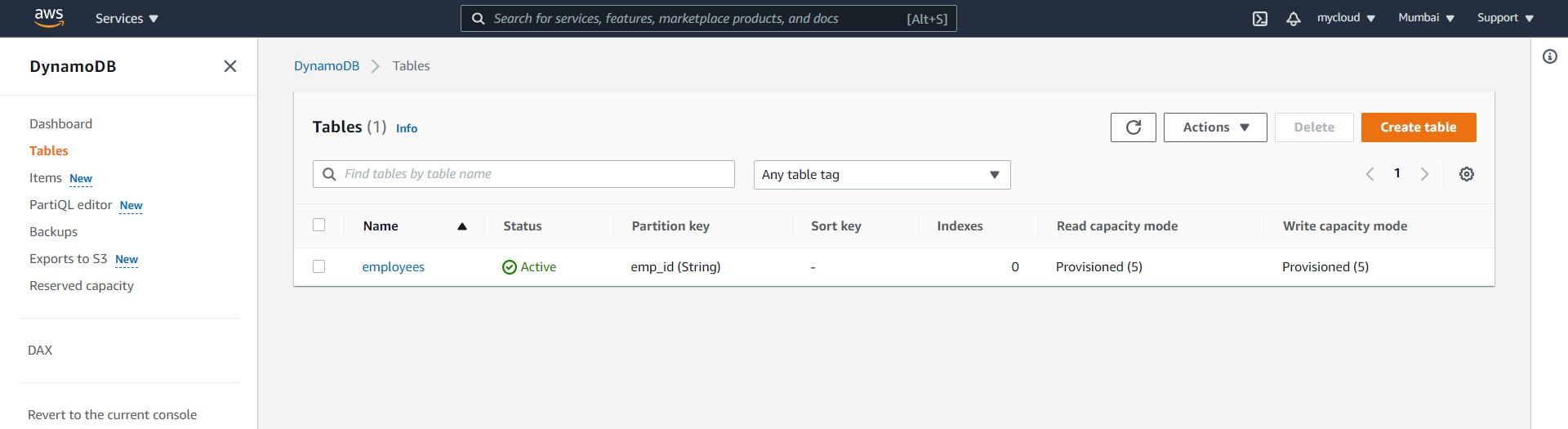

- Create the DynamoDB table. For this blog, I have named it "employees".

Note: If you change the DynamoDB table name, pass the same name to the Lambda function.

- Create an S3 bucket to receive your JSON objects

- Create the Lambda function. It is written in Python 3.x, and you can find the working code at the link below:

https://github.com/prasannakumarkn/LambdaFunctions/blob/feature/s3-to-dynamodb

- The Lambda IAM role requires the following permissions:

- S3 GetObject (s3:GetObject) and DynamoDB PutItem (dynamodb:PutItem) permissions

- AWSLambdaBasicExecutionRole (managed policy)

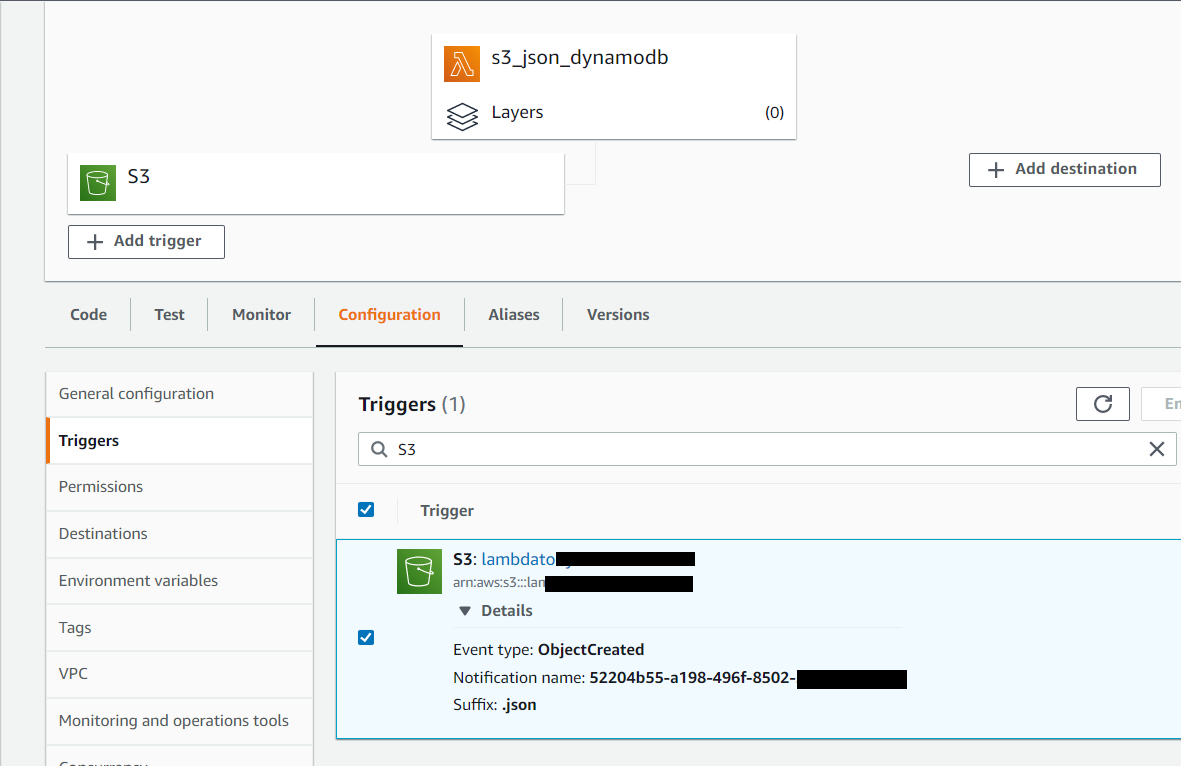

- There are two ways to configure the trigger: create an event notification in the S3 bucket properties, or add S3 as a trigger in the Lambda console.

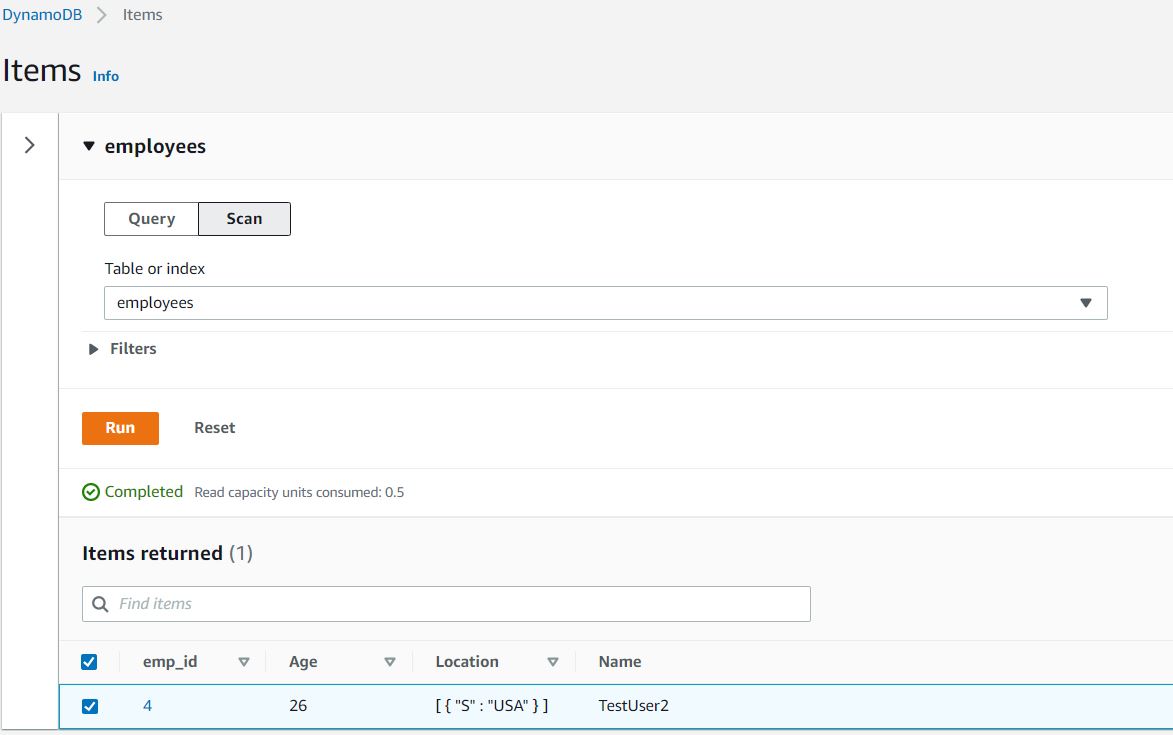
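The console steps above can also be scripted with boto3. The sketch below is one possible way to do it, not the setup used for the screenshots. It assumes `emp_id` is the table's string partition key (the post does not state the key; the sample object suggests it), on-demand billing, and a `.json` suffix filter on the trigger. All function names and the `s3-invoke` statement ID are hypothetical.

```python
def table_definition(table_name="employees", key_name="emp_id"):
    """Keyword arguments for DynamoDB create_table with one string partition key."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": key_name, "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": key_name, "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
    }


def lambda_policy(bucket, table_arn):
    """IAM policy document granting only the two data actions the function needs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:GetObject",
             "Resource": f"arn:aws:s3:::{bucket}/*"},
            {"Effect": "Allow", "Action": "dynamodb:PutItem",
             "Resource": table_arn},
        ],
    }


def notification_config(function_arn, suffix=".json"):
    """S3 event notification that invokes the function for newly created objects."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {"Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}},
            }
        ]
    }


def apply_setup(bucket, function_arn):
    """Create the table and wire the S3 trigger (needs AWS credentials)."""
    import boto3  # imported lazily so the builders above work without the SDK

    boto3.client("dynamodb").create_table(**table_definition())
    # Outside the console, S3 must be allowed to invoke the function explicitly.
    boto3.client("lambda").add_permission(
        FunctionName=function_arn,
        StatementId="s3-invoke",  # hypothetical statement ID
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
    )
    boto3.client("s3").put_bucket_notification_configuration(
        Bucket=bucket, NotificationConfiguration=notification_config(function_arn)
    )
```

The policy returned by `lambda_policy` would be attached to the Lambda role alongside the AWSLambdaBasicExecutionRole managed policy, which covers writing logs to CloudWatch.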

Testing

Upload a JSON object to the S3 bucket, and Lambda will automatically put it into the DynamoDB table.

- I uploaded the JSON object below to the S3 bucket:

{ "emp_id":"4", "Name":"TestUser2", "Age":26, "Location":["USA"] }
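The same test can be run from a script instead of the console. The following is a rough sketch under a few assumptions: `emp_id` is the table's partition key, the trigger is already configured, and a short fixed wait is enough for Lambda to finish. The function name `upload_and_fetch` and the object key `emp-4.json` are made up for illustration.

```python
import json
import time

# The sample object from this post, as it would be stored in the uploaded file.
SAMPLE = '{ "emp_id":"4", "Name":"TestUser2", "Age":26, "Location":["USA"] }'


def upload_and_fetch(bucket, key="emp-4.json", table_name="employees", wait_seconds=5):
    """Upload the sample to S3, wait briefly, then read the item back from DynamoDB."""
    import boto3  # imported lazily; calling this function needs AWS credentials

    boto3.client("s3").put_object(Bucket=bucket, Key=key, Body=SAMPLE.encode("utf-8"))
    time.sleep(wait_seconds)  # crude wait for the asynchronous Lambda invocation

    table = boto3.resource("dynamodb").Table(table_name)
    emp_id = json.loads(SAMPLE)["emp_id"]
    response = table.get_item(Key={"emp_id": emp_id}, ConsistentRead=True)
    return response.get("Item")  # None if the item has not been written yet
```

When the item is read back through the boto3 resource, numeric attributes such as `Age` are returned as `Decimal` values rather than `int`.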

Conclusion

As you can see, with some very basic Lambda code we were able to move data from S3 to a DynamoDB table. Refer to the boto3 documentation for the proper syntax when you work with the S3 and DynamoDB calls in your own function.

Thank you for reading!
