Deploy AWS infrastructure using Terraform and GitHub Actions

Terraform is an Infrastructure as Code (IaC) tool that helps us manage infrastructure across several platforms in a consistent manner. In this blog, I will walk through deploying Terraform code using GitHub Actions.

Terraform code to create S3 bucket

In this section, I will walk through each block of the Terraform code that creates an S3 bucket in AWS through an automated workflow using GitHub Actions.

Let's look at the Terraform files. To make them easier to understand, I have created a separate .tf file for each purpose, but you can put everything in a single .tf file if that is more convenient. Any file name with the .tf extension works; for clarity I used the following names.

versions.tf

<required_version> Specifies which versions of Terraform can be used with your configuration. The required_providers syntax with a source address needs Terraform 0.13 or later, and the code below requires 0.14 or later.

    terraform {
      required_version = ">= 0.14"

      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "3.36.0"
        }
      }

      backend "s3" {
        bucket         = "prasanna-aws-demo-terraform-tfstate"
        key            = "creates3.tfstate"
        region         = "ap-south-1"
        dynamodb_table = "prasanna-aws-demo-terraform-tfstatelock"
        encrypt        = true
      }
    }

<required_providers> This block specifies all of the providers required by the current module, mapping each local provider name to a source address and a version constraint.
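To illustrate what a version constraint can look like, here is a minimal sketch that uses the pessimistic operator `~>` instead of an exact pin. The constraint `~> 3.36` is an assumption chosen for illustration; the configuration in this post pins exactly `3.36.0`, and this block would replace that pin rather than sit beside it.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Assumption for illustration: accept any 3.x release from 3.36 onward,
      # but never 4.0 or later. The post itself pins exactly "3.36.0".
      version = "~> 3.36"
    }
  }
}
```

An exact pin gives fully repeatable runs, while a range like this picks up provider bug fixes on the next `terraform init -upgrade`. Which trade-off fits depends on the team.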

<backend> This block keeps the state file in a secure remote location. The state file records the current state of the infrastructure that Terraform manages. In this case, I have used an existing S3 bucket. To prevent two team members from writing to the state file at the same time, we use a DynamoDB table as a lock. With the lock in place, only one Terraform run can modify a given state at a time, which prevents conflicts, data loss, and state file corruption caused by concurrent runs.
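The backend expects the lock table to exist before `terraform init` runs. As a rough sketch, the table could be created once from a separate bootstrap configuration like the one below. The resource label `tfstate_lock` and the on-demand billing mode are assumptions for illustration; the table name is taken from the backend block, and the `LockID` string key is what the S3 backend uses for its lock entries.

```hcl
# Hypothetical bootstrap configuration, applied once and kept separate
# from the configuration whose state it protects.
resource "aws_dynamodb_table" "tfstate_lock" {
  # Table name referenced by dynamodb_table in the backend block
  name = "prasanna-aws-demo-terraform-tfstatelock"

  # Assumption: on-demand capacity, since lock traffic is very light
  billing_mode = "PAY_PER_REQUEST"

  # The S3 backend stores its lock entries under a string key named LockID
  hash_key = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```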

providers.tf

Terraform relies on plugins called "providers" to interact with cloud providers, SaaS providers, etc. var.region is read from a variable defined in variables.tf, which is shown later in this post.

    provider "aws" {
      region = var.region
    }

main.tf

    resource "aws_s3_bucket" "prasanna_test" {
      bucket = var.s3_bucket_name
      acl    = var.acl

      tags = merge({
        Name        = var.s3_bucket_name
        Environment = var.Environment
      })
    }

A resource block declares the actual infrastructure object we are creating. In this case the resource type is aws_s3_bucket, which creates an S3 bucket with the given attributes, and prasanna_test is the local name used to refer to the bucket within the configuration.
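To show how the resource type and local name combine into an address that other blocks can reference, here is a small sketch of an output. The file name `outputs.tf` and the output name `bucket_arn` are hypothetical and not part of the original layout.

```hcl
# outputs.tf (hypothetical file, not part of the original folder structure)
output "bucket_arn" {
  description = "ARN of the bucket created in main.tf"

  # <resource type>.<local name>.<attribute>
  value = aws_s3_bucket.prasanna_test.arn
}
```

After an apply, the value is printed at the end of the run, so it would also appear in the workflow log.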

variables.tf

Below are the variables required by our .tf files.

    # common variables
    variable "s3_bucket_name" {
      description = "S3 bucket name, also used as the Name tag"
      type        = string
      default     = "my-tf-s3-bucket-prasannakn"
    }

    variable "acl" {
      description = "access control list"
      type        = string
      default     = "private"
    }

    variable "Environment" {
      description = "value of the Environment tag"
      type        = string
      default     = "Dev"
    }

    variable "region" {
      description = "region"
      type        = string
      default     = "ap-south-1"
    }
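Since every variable has a default, the configuration runs without any extra input. If you want different values per environment, one option is a variable definitions file. The sketch below assumes a hypothetical file called `prod.tfvars` and a hypothetical bucket name; neither exists in the original repo.

```hcl
# prod.tfvars (hypothetical file name)
# Values here override the defaults declared in variables.tf.
s3_bucket_name = "my-tf-s3-bucket-prasannakn-prod" # hypothetical bucket name
Environment    = "Prod"
region         = "ap-south-1"
```

It would be passed explicitly, for example `terraform plan -var-file=prod.tfvars`. Variables not listed in the file, such as acl, keep their defaults.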

Deploy Terraform code via Github Actions

Now that we have the Terraform code, let's deploy it to the AWS environment through an automated workflow using GitHub Actions.

As a prerequisite, in order to deploy resources in AWS, you have to store the user credentials in GitHub secrets, as described below.


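Create an IAM user with programmatic access and S3 permissions from the AWS console; please refer to the official AWS documentation for the steps to create an IAM user. Because the backend stores state in S3 and locks it through DynamoDB, the user also needs access to the state bucket and the lock table. Add the access key ID and the secret access key to GitHub secrets as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, which are the names the workflows read.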
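For readers who prefer to describe the permissions in code rather than click through the console, here is a minimal sketch of a policy document covering only the backend (state bucket and lock table). The data source label `tfstate_backend` is hypothetical, the action list reflects my understanding of what the S3 backend needs and should be checked against the backend documentation, and the permissions for creating the demo bucket itself are not shown.

```hcl
# Sketch only: backend access for the CI user, under the assumptions above.
data "aws_iam_policy_document" "tfstate_backend" {
  # List the state bucket
  statement {
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::prasanna-aws-demo-terraform-tfstate"]
  }

  # Read and write the state object named by the backend "key"
  statement {
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::prasanna-aws-demo-terraform-tfstate/creates3.tfstate"]
  }

  # Acquire and release the lock entry in the DynamoDB table
  statement {
    actions   = ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:DeleteItem"]
    resources = ["arn:aws:dynamodb:ap-south-1:*:table/prasanna-aws-demo-terraform-tfstatelock"]
  }
}
```

The rendered JSON is available as `data.aws_iam_policy_document.tfstate_backend.json` and could be attached to the user through a policy resource or pasted into the console.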

Let's look at the GitHub workflows. We have the two pipelines below:

- TerraformTest.yml - Validates the Terraform code; it triggers on every push, irrespective of the branch

- TerraformApply.yml - Deploys the Terraform code; it triggers on a push to the main branch, that is, when a pull request is merged into main

We use two pipelines because each developer works in their own branch. The first pipeline lets a developer validate the code before opening a pull request, without having to validate it in a local setup. The second pipeline applies the changes in AWS after the code is merged to the main branch, so the infrastructure change is validated before it reaches main.

Let's look at both workflows.

    name: Terraform Test

    # Trigger the workflow on push to test the Terraform code
    on: push

    jobs:
      terraform_test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v1

          - name: Install Terraform
            env:
              TERRAFORM_VERSION: "1.0.11"
            run: |
              tf_version=$TERRAFORM_VERSION
              wget https://releases.hashicorp.com/terraform/"$tf_version"/terraform_"$tf_version"_linux_amd64.zip
              unzip terraform_"$tf_version"_linux_amd64.zip
              sudo mv terraform /usr/local/bin/

          - name: Verify Terraform version
            run: terraform --version

          - name: Terraform init
            env:
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: terraform init -input=false

          - name: Terraform validation
            run: terraform validate

          - name: Terraform plan
            env:
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: terraform plan

This pipeline checks out the code on the runner, reads the credentials from GitHub secrets, initializes Terraform, and validates the code. At the end, terraform plan shows the difference between the current and the desired state. This pipeline triggers on every push.

    name: Terraform Apply

    on:
      # Trigger the workflow on push to the main branch only,
      # for example when a pull request is merged
      push:
        branches:
          - main

    jobs:
      terraform_apply:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v1

          - name: Install Terraform
            env:
              TERRAFORM_VERSION: "1.0.11"
            run: |
              tf_version=$TERRAFORM_VERSION
              wget https://releases.hashicorp.com/terraform/"$tf_version"/terraform_"$tf_version"_linux_amd64.zip
              unzip terraform_"$tf_version"_linux_amd64.zip
              sudo mv terraform /usr/local/bin/

          - name: Verify Terraform version
            run: terraform --version

          - name: Terraform init
            env:
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: terraform init -input=false

          - name: Terraform apply
            env:
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: terraform apply -auto-approve -input=false

This pipeline checks out the code on the runner, reads the credentials from GitHub secrets, and runs terraform init followed by terraform apply.

Looking into the tree, below is my folder structure, where terraform_deploy is my main folder and inside we have all the required files.

    terraform_deploy
    │   main.tf
    │   providers.tf
    │   README.md
    │   variables.tf
    │   versions.tf
    │
    └───.github
        └───workflows
                TerraformApply.yml
                TerraformTest.yml

Let's create a new branch and push the code to the GitHub repo. Here is my GitHub repo for your reference. Once you push the code to the new branch, you can see the Terraform Test pipeline start running.

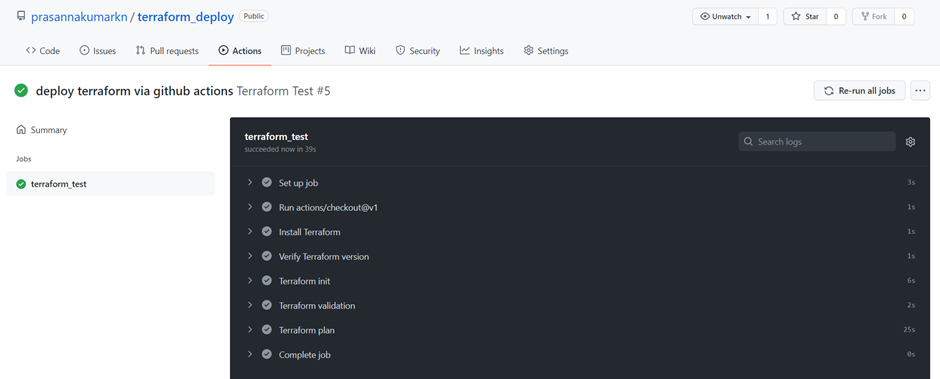

The Terraform Test run is green, and you can review the output of each Terraform step, including the plan, in the job log.


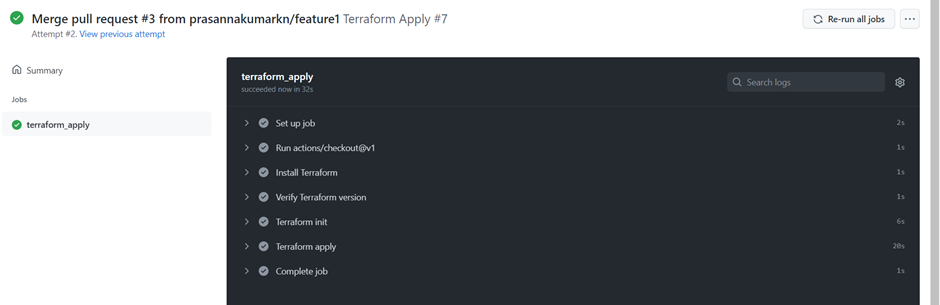

Let's merge the code to the main branch using a pull request. After the code is merged, the Terraform Apply pipeline runs and creates the S3 bucket in AWS.

Conclusion

Thank you for reading!
